Fonts are a key component of embedded GUI development, and we see a lot of confusion when it comes to understanding them. What's the difference between pre-rendered Bitmap fonts and fonts rendered at runtime? How does text rendering impact memory usage and the quality of your user experience?

This blog covers these concepts, and more, to help you make better choices when it comes to font selection and rendering on your embedded GUI hardware. Based on one of our Embedded GUI Expert Talks, you can either read the content below or watch this video with Thomas Fletcher, Co-Founder and VP of R&D, and Rodney Dowdall, Product Architect:


Optimizing graphics memory in embedded systems

In one of our previous live Embedded GUI Expert Talks, we discussed the nature of embedded images and how selecting the right image format can have a large impact on your embedded GUI, both in terms of performance and in terms of what you can actually fit in the resources you have (RAM and Flash). Looking back on that discussion, there are a lot of similarities between fonts and images.

How font rendering works

If you think about a string that's rendered to a computer screen, that string is just a collection of individual glyphs representing each character. They're essentially mini images, but they aren't encoded the way an image is in a PNG file. A font file contains only the data needed to render each glyph as a mini image at runtime.

Font file formats

With regular images, there are multiple formats, including PNG, JPEG, and Bitmap. Fonts also come in a whole bunch of different formats. The two main types for embedded systems are TrueType, developed by Apple, and OpenType, co-developed by Microsoft and Adobe.

Both font file formats contain just the data that describes the glyphs, not rendered images of them. If the files also contained images of every glyph at every size, they would be far too massive.

Going from font file to screen

Because font files contain only raw data, your GUI application typically needs a font engine to render text. The font engine extracts the data from the file, constructs the glyph (mini image) in memory at runtime, and then pushes it to the display.

When a glyph is rendered for the first time, it's a good idea to cache the result in memory so that the next time you need it, your application can just grab it from memory rather than computing the glyph data from scratch again.

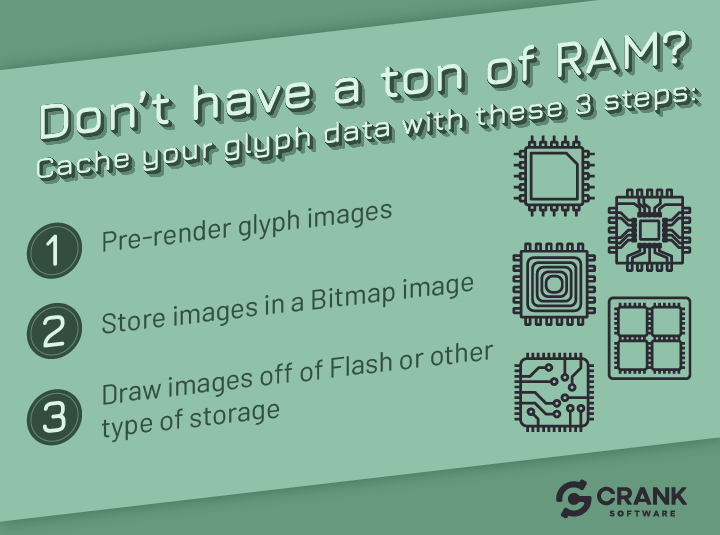
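The render-once, reuse-afterwards idea can be sketched as a small cache, shown in Python for readability. `GlyphCache` and `render_glyph` are hypothetical names; `render_glyph` stands in for a real font engine call and just returns a solid box. The cache is keyed on both the character and the point size, because the same character at a different size is a different glyph image.

```python
from typing import Callable, Dict, Tuple

# A glyph image: (width, height, 8-bit alpha values in row-major order).
Glyph = Tuple[int, int, bytes]


class GlyphCache:
    """Renders each (character, point size) pair once and reuses the result."""

    def __init__(self, render: Callable[[str, int], Glyph]) -> None:
        self._render = render
        self._glyphs: Dict[Tuple[str, int], Glyph] = {}
        self.misses = 0  # how many times the font engine actually had to render

    def get(self, char: str, size: int) -> Glyph:
        key = (char, size)
        glyph = self._glyphs.get(key)
        if glyph is None:
            # First use: pay the rendering cost once and keep the result in RAM.
            glyph = self._render(char, size)
            self._glyphs[key] = glyph
            self.misses += 1
        return glyph


def render_glyph(char: str, size: int) -> Glyph:
    """Hypothetical stand-in for a font engine: a solid size x size box."""
    return (size, size, bytes([255]) * (size * size))


cache = GlyphCache(render_glyph)
for ch in "HELLO":
    cache.get(ch, 18)
print(cache.misses)  # 4: the second "L" comes from the cache
```

Note that this cache grows with every new character and size it sees, which is exactly the RAM cost discussed next.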

Caching and pre-rendering fonts

The use of a cache goes back to the similarity between images and fonts. Just as a compressed image format like PNG or JPEG has to be decoded into memory before it can be drawn, font glyphs have to be rendered into memory, and a local cache keeps them there for reuse.

Caching the data uses RAM, but if your hardware doesn't have much RAM to spare, you can decide to pre-render the glyph images before runtime and store them in a Bitmap image that's included as part of your application. This way, your application can draw the fonts directly from Flash or other storage without using up precious RAM. In other words, the font glyphs require no RAM at all: they are drawn directly from the storage device.

It's important to note that a pre-rendered Bitmap font stores only alpha (coverage) data, not color. If you want to colorize your fonts, the renderer combines the text color with the stored alpha values as it draws each glyph (mini image) onto the screen.
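To make the colorization step concrete, here is a minimal sketch of linear alpha blending for a single RGB888 pixel, a common way to apply a text color to a stored alpha value. The function name and the integer rounding are illustrative assumptions, not the exact math of any particular engine.

```python
def blend_pixel(text_rgb, background_rgb, alpha):
    """Blend one glyph pixel onto the background.

    alpha is the stored 8-bit coverage value (0 = transparent, 255 = opaque);
    the text color is supplied at draw time and never stored in the glyph data.
    """
    return tuple(
        (t * alpha + b * (255 - alpha) + 127) // 255  # rounded integer blend
        for t, b in zip(text_rgb, background_rgb)
    )


red, white = (255, 0, 0), (255, 255, 255)
print(blend_pixel(red, white, 255))  # (255, 0, 0): fully covered pixel
print(blend_pixel(red, white, 0))    # (255, 255, 255): untouched background
print(blend_pixel(red, white, 128))  # (255, 127, 127): anti-aliased edge
```

Because the color is only applied while drawing, the same alpha data can be drawn in any color without storing extra copies.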

Pre-rendering saves RAM and avoids the computational cost of unpacking and rendering the font glyphs, but the drawback is the storage space required for the Bitmaps.

As an example, say you have the Roboto font (an open source font provided by Google) in three different sizes: a 70 point font, a 48 point font, and an 18 point font. Every glyph has to be pre-rendered ahead of time at each of those sizes, so the storage required gets really, really big, really, really fast. With these three point sizes, you're looking at approximately 5.6 megabytes of storage for that font in Flash memory.

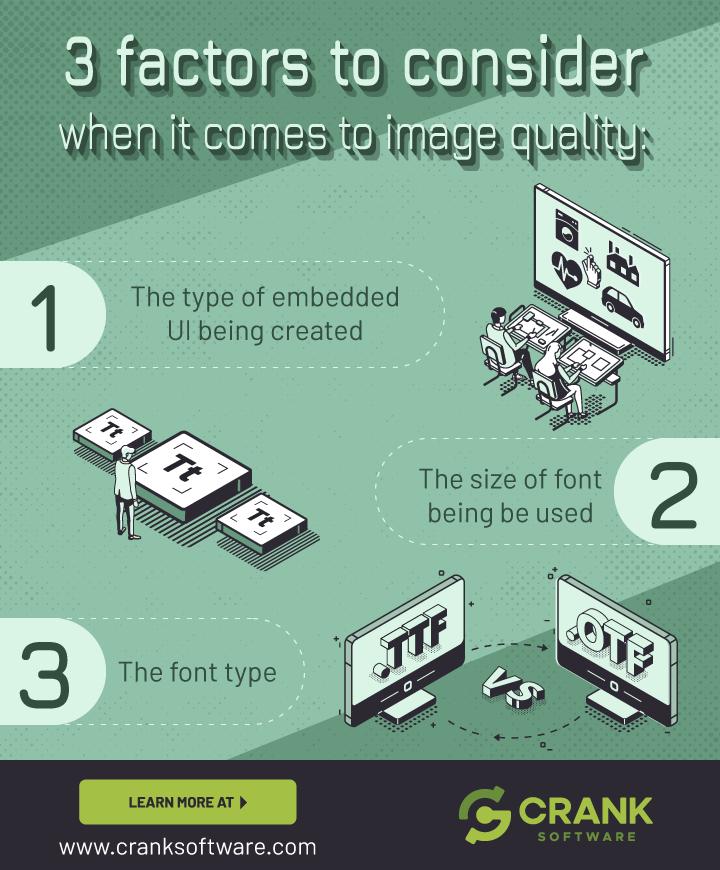
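A back-of-the-envelope model shows why the storage grows so quickly: each point size needs its own glyph set, and each glyph costs roughly width × height × bits-per-pixel / 8 bytes. The glyph count, treating point size as pixel height, and the 0.6 width ratio below are all hypothetical assumptions; they are not the measurements behind the 5.6 MB figure, and a real tool would also store per-glyph metrics and padding.

```python
def glyph_set_bytes(num_glyphs, pixel_size, bits_per_pixel=8,
                    width_ratio=0.6, height_ratio=1.0):
    """Rough storage estimate for one pre-rendered glyph set.

    Assumes every glyph occupies an average box of
    (width_ratio * pixel_size) x (height_ratio * pixel_size) pixels.
    """
    width = round(width_ratio * pixel_size)
    height = round(height_ratio * pixel_size)
    bits = num_glyphs * width * height * bits_per_pixel
    return (bits + 7) // 8  # round up to whole bytes


# Hypothetical: 1,000 glyphs per size, point sizes treated as pixel heights.
sizes = [70, 48, 18]
for s in sizes:
    print(s, glyph_set_bytes(1000, s))
print("total bytes:", sum(glyph_set_bytes(1000, s) for s in sizes))
```

The key point is the quadratic term: doubling the pixel size roughly quadruples the bytes per glyph, so the largest size dominates the total.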

3 factors to consider when it comes to font quality

- The type of embedded GUI your development team is creating

- The size of the font being used

- The font type being used

For example, there's going to be a tradeoff in quality if your team is trying to save storage space by pre-rendering fonts with fewer alpha bits. If you go from 8-bit to 4-bit alpha, you may not see much of a difference, but as you go down to 2-bit and 1-bit, the quality loss becomes significant.

Tips to save space when pre-rendering fonts

1. Store fewer bits for the alpha map

The first way your embedded GUI development team can save storage space during pre-rendering is to store fewer bits for the alpha map. You could try 4-bit, 2-bit, and 1-bit alpha levels and see how much space is saved. Lower bit depths, however, reduce the crispness of your fonts, so it's always a good idea to validate the results on a simulator or on hardware to see which bit depth works best for your user experience.
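Storing fewer bits can be pictured as reducing each 8-bit alpha value to one of 2^N levels and packing several pixels into each byte. This is a minimal sketch; the most-significant-bits-first packing order and the rounding are assumptions, and real Bitmap font formats define their own layouts.

```python
def quantize(alpha: int, bits: int) -> int:
    """Map an 8-bit alpha (0-255) to the nearest of 2**bits levels."""
    levels = (1 << bits) - 1
    return (alpha * levels + 127) // 255


def expand(level: int, bits: int) -> int:
    """Map an N-bit level back to 0-255 for drawing."""
    levels = (1 << bits) - 1
    return (level * 255 + levels // 2) // levels


def pack(alphas, bits: int) -> bytes:
    """Quantize 8-bit alphas to `bits` bits and pack them, MSB first."""
    if bits not in (1, 2, 4, 8):
        raise ValueError("bits must be 1, 2, 4, or 8")
    per_byte = 8 // bits
    out = bytearray()
    for i in range(0, len(alphas), per_byte):
        byte = 0
        for j, a in enumerate(alphas[i:i + per_byte]):
            byte |= quantize(a, bits) << (8 - bits * (j + 1))
        out.append(byte)
    return bytes(out)


edge = [0, 64, 128, 192, 255, 255, 128, 0]  # one row across a curved edge
for bits in (8, 4, 2, 1):
    restored = [expand(quantize(a, bits), bits) for a in edge]
    print(bits, len(pack(edge, bits)), restored)
```

The packed size shrinks in proportion to the bit depth, while the restored values show the in-between alpha levels along the edge collapsing at 2-bit and disappearing entirely at 1-bit.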

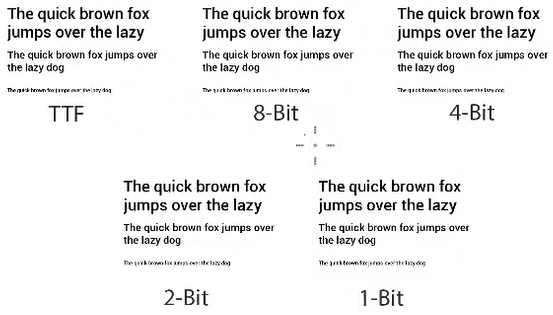

Comparison of different font alpha levels

At 6:23 in the above video, we compare the visual results of different bit depths. On the top left is a TrueType font as rendered by a font engine and stored in memory, with no pre-rendering. The other results show the 8-bit, 4-bit, 2-bit, and 1-bit pre-rendered Bitmap scenarios.

With 1-bit depth, you lose some of the crispness and introduce jaggedness around any rounded characters like C, O, or Q. This is because a 1-bit encoding gives you only an “on or off switch”: you're either drawing that pixel or you're not. That makes drawing rounded edges or circles really tricky.

In an 8-bit scenario, you have 256 levels of alpha (0 to 255), allowing for nice rounded corners and smoothness in the overall result.

What’s the difference between TTF and 8-bit alpha?

There is no difference between the TTF and 8-bit scenarios. They are equivalent because rendering a TTF glyph produces an 8-bit alpha map: when the font engine reads data from the font file and creates the glyph image, it's actually creating an 8-bit alpha map of that specific glyph, very similar to a pre-rendered version.
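To illustrate where an 8-bit alpha map comes from, the sketch below rasterizes a filled circle (a stand-in for the round bowl of an "O") by supersampling: each pixel's alpha is the fraction of sample points inside the shape, scaled to 0-255. Real font engines rasterize the font's curve outlines with more exact coverage computation, so treat this brute-force version only as a model of the idea; the function names are hypothetical.

```python
def circle_alpha_map(size: int, samples: int = 4):
    """Return size x size rows of 8-bit alpha values for a filled circle."""
    radius = cx = cy = size / 2
    rows = []
    for y in range(size):
        row = []
        for x in range(size):
            inside = 0
            for sy in range(samples):
                for sx in range(samples):
                    # Sample points spread evenly inside the pixel.
                    px = x + (sx + 0.5) / samples
                    py = y + (sy + 0.5) / samples
                    if (px - cx) ** 2 + (py - cy) ** 2 <= radius ** 2:
                        inside += 1
            # Coverage fraction -> 8-bit alpha.
            row.append(round(255 * inside / (samples * samples)))
        rows.append(row)
    return rows


def threshold_1bit(rows):
    """The 1-bit version: each pixel is simply on (1) or off (0)."""
    return [[1 if a >= 128 else 0 for a in row] for row in rows]


alpha = circle_alpha_map(8)
for row in alpha:
    print(" ".join(f"{a:3d}" for a in row))
```

The interior comes out fully opaque, the corners are zero, and pixels along the curve get intermediate values. The 1-bit version keeps only the on/off decision for those edge pixels, which is where the jagged edges on C, O, and Q come from.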

Quality loss or degradation is most noticeable with smaller fonts and text. How much it matters depends on your embedded user interface, the size of font you're using, and the type of font you're using, so it varies based on what you want to have happen in your GUI.

2. Minimize character counts to reduce space

The second way to reduce storage space is to minimize character counts. Fonts come with a lot of characters (glyphs), but your user interface may not actually need all of them.

If you were making a thermostat as your embedded GUI project, you wouldn't need all of the characters, only 0 to 9, for example. That means you could strip the unused glyphs out of the font and only pre-render and store those ten characters.
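When the UI text is fixed, the set of glyphs to keep can be derived from the strings the interface actually displays. In this minimal sketch, `ui_strings` is a hypothetical list of every label in a thermostat UI, and the helper names are made up for illustration; the resulting character set is what you would keep when subsetting or pre-rendering the font.

```python
def required_characters(ui_strings):
    """Collect every distinct character the UI will ever draw."""
    chars = set()
    for text in ui_strings:
        chars.update(text)
    chars.discard(" ")  # a space needs an advance width but no glyph image
    return sorted(chars)


def missing_characters(text, glyph_set):
    """Characters in `text` that a subsetted font could not render."""
    return sorted(set(text) - set(glyph_set) - {" "})


# Hypothetical thermostat: temperatures 10-30 plus a degree sign and unit.
ui_strings = [str(t) for t in range(10, 31)] + ["°C"]
glyphs = required_characters(ui_strings)
print("".join(glyphs))
print(missing_characters("Heat 21°C", glyphs))
```

A check like `missing_characters` is only meaningful when every string is known ahead of time; text that is only known at runtime cannot be verified this way.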

An open source tool like FontForge lets you modify an existing font, create your own font, and take out what you don't need in your font file.

This only works if you know exactly which characters are necessary beforehand. If you don't know what kind of text you're dealing with, or if you have a dynamic GUI where text or point sizes change frequently, you would have to pre-render every glyph and size you might need ahead of time, because the application is not going to know what it needs until it's running.

Font optimization for embedded GUI development projects

When it comes to runtime performance, Bitmap fonts are much faster than rendering through an engine because the glyphs are pre-computed, so there's no rendering stage at runtime. Load times and memory lookups are a lot faster.

A quick rule of thumb for choosing between runtime font rendering and pre-rendered Bitmaps: Do you have more Flash or more RAM?

- If you have more RAM: Go with a font engine rendering scenario

- If you have more Flash: Go with a pre-rendered Bitmap scenario

Another consideration is the overall UX design. Does it require a lot of dynamic fonts and sizes? If so, the font engine approach is probably your better strategy.

If your embedded GUI project uses dynamic text, such as internationalized languages, a variety of font types, or generally dynamic behavior throughout the user interface, you can't pre-render it. In that case, your best approach is a font engine. When using a font engine, you will want to use the cache attributes to limit the amount of RAM it consumes so that it still fits on small embedded devices.
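The rule of thumb, including the dynamic-text override, can be written down as a small decision helper. The enum, the function name, and treating "more RAM or more Flash" as a plain size comparison are simplifications assumed for this sketch; in practice you would compare the measured costs of your own fonts and sizes.

```python
from enum import Enum


class FontStrategy(Enum):
    FONT_ENGINE = "render at runtime with a font engine (bounded glyph cache)"
    PRE_RENDERED = "pre-rendered Bitmap fonts stored in Flash"


def choose_font_strategy(ram_bytes: int, flash_bytes: int,
                         dynamic_text: bool) -> FontStrategy:
    """Dynamic text forces a font engine; otherwise favor the larger memory."""
    if dynamic_text:
        # Text that is only known at runtime cannot be pre-rendered up front.
        return FontStrategy.FONT_ENGINE
    if ram_bytes > flash_bytes:
        return FontStrategy.FONT_ENGINE
    return FontStrategy.PRE_RENDERED


print(choose_font_strategy(512 * 1024, 16 * 1024 * 1024, dynamic_text=False))
print(choose_font_strategy(512 * 1024, 16 * 1024 * 1024, dynamic_text=True))
```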

How Storyboard helps understand font usage

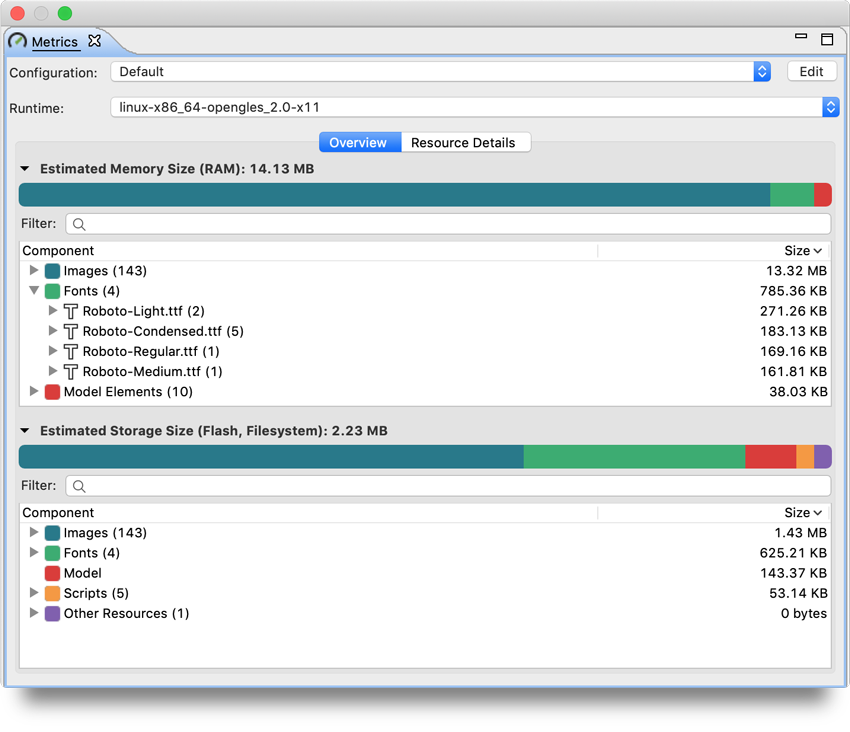

In the Storyboard embedded GUI framework, a metrics overview shows how much RAM and storage space is required by different elements within the application. You can use this view to compare different font configurations and encodings to see their impact on the overall system.

Metrics view in Storyboard

You can also set a resource limit that allows you to say “only consume X amount of RAM for my cache”, ensuring that it won't grow unbounded.
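A limit like this is commonly implemented as a least-recently-used (LRU) cache with a byte budget: when adding a glyph would exceed the budget, the glyphs that have gone unused the longest are evicted first. This is a generic sketch of that technique, not Storyboard's implementation; the class name, the hypothetical `render_box` renderer, and counting only alpha bytes are assumptions.

```python
from collections import OrderedDict


class BoundedGlyphCache:
    """Glyph cache that never holds more than `max_bytes` of alpha data."""

    def __init__(self, render, max_bytes: int) -> None:
        self._render = render          # hypothetical font engine callback
        self._max_bytes = max_bytes
        self._glyphs = OrderedDict()   # (char, size) -> alpha bytes, oldest first
        self.used_bytes = 0

    def get(self, char: str, size: int) -> bytes:
        key = (char, size)
        if key in self._glyphs:
            self._glyphs.move_to_end(key)  # mark as most recently used
            return self._glyphs[key]
        glyph = self._render(char, size)
        if len(glyph) > self._max_bytes:
            return glyph  # too large to cache: draw it without caching
        # Evict least recently used glyphs until the new one fits.
        while self.used_bytes + len(glyph) > self._max_bytes:
            _, old = self._glyphs.popitem(last=False)
            self.used_bytes -= len(old)
        self._glyphs[key] = glyph
        self.used_bytes += len(glyph)
        return glyph

    def cached_keys(self):
        return list(self._glyphs)


def render_box(char: str, size: int) -> bytes:
    """Hypothetical renderer: a size x size block of opaque alpha."""
    return bytes([255]) * (size * size)


cache = BoundedGlyphCache(render_box, max_bytes=1000)
for ch in "ABCD":
    cache.get(ch, 18)  # 324 bytes each, so only three fit in 1000 bytes
print(cache.used_bytes, cache.cached_keys())
```

The tradeoff of a tight budget is more re-rendering when the UI cycles through many glyphs, so the limit is usually tuned against the text a screen actually shows.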

Using rendering technologies to your advantage

In terms of rendering technologies, the metrics reporting in Storyboard provides quick feedback on image costs and choices. The same goes for font costs and choices: you can see exactly how much RAM is being used versus how much Flash, and balance the two.

Audience Q&A on fonts for embedded GUIs

Are there certain rendering technologies that we can take advantage of and incorporate directly into the engine?

Answer: We can answer by looking at OpenGL versus software font rendering. In the OpenGL case, Storyboard caches the font glyphs in a texture, so we're able to look them up there instead of relying on cache memory as in the software rendering case. This requires less memory because, with OpenGL, the texture already exists and we just use it rather than repeatedly creating and destroying it.
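Caching glyphs in a texture is usually done with a glyph atlas: glyph images are packed into regions of one larger texture, and drawing a character becomes a lookup of its rectangle. The sketch below uses simple "shelf" (row-based) packing to assign those rectangles. It does not call OpenGL, `ShelfAtlas` is a hypothetical name, and it is not a description of how Storyboard lays out its textures.

```python
class ShelfAtlas:
    """Assigns each glyph a rectangle inside a fixed-size texture.

    Glyphs are placed left to right on horizontal shelves; when a row is full,
    a new shelf starts below the tallest glyph of the current one.
    """

    def __init__(self, width: int, height: int) -> None:
        self.width, self.height = width, height
        self._x = 0          # next free x on the current shelf
        self._shelf_y = 0    # top of the current shelf
        self._shelf_h = 0    # tallest glyph on the current shelf
        self.regions = {}    # key -> (x, y, w, h) in texture pixels

    def add(self, key, w: int, h: int):
        if key in self.regions:
            return self.regions[key]      # already in the texture: just look it up
        if w > self.width:
            return None                   # can never fit
        if self._x + w > self.width:      # row full: open a new shelf
            self._shelf_y += self._shelf_h
            self._x, self._shelf_h = 0, 0
        if self._shelf_y + h > self.height:
            return None                   # atlas full (a real cache would evict or grow)
        region = (self._x, self._shelf_y, w, h)
        self.regions[key] = region
        self._x += w
        self._shelf_h = max(self._shelf_h, h)
        return region


atlas = ShelfAtlas(64, 64)
print(atlas.add(("A", 18), 12, 18))
print(atlas.add(("B", 18), 12, 18))
print(atlas.add(("A", 18), 12, 18))  # repeated lookup, no new space used
```

In a real OpenGL renderer, each region's alpha data would then be uploaded into the texture once (for example with `glTexSubImage2D`) and reused for every later draw of that glyph.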

What about 16-bit versus 32-bit displays and the impacts on overall memory consumption?

Answer: It doesn't play much of a role in the pre-rendered font case because the image itself is just an alpha map, so it's already 8-bit, 4-bit, 2-bit, etc. The impact comes when we need to colorize. If I want text in red, green, and blue, I'm not going to keep three different copies of those glyphs. I keep only the one copy and calculate the color values as we go through the individual pixels, so whether the display is 16-bit, 24-bit, or 32-bit doesn't matter here.

Does Storyboard support custom fonts or only specific font types?

Answer: When pre-generating fonts, Storyboard follows these steps:

- Open the font file

- Read the glyph data out of the file

- Render it into an image

- Push it out as a Bitmap font

It doesn't matter whether the input font is customized or not; the output is just a Bitmap image of the alpha map data for that font. The output, however, is specific to our tooling and our Storyboard engine.
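Since the real output format is specific to Storyboard's tooling, here is only a sketch of what the last step (writing rendered alpha maps out as a Bitmap font) could look like with a made-up binary layout. The magic bytes, header fields, and record layout are all hypothetical; the point is that the output is nothing more than glyph metrics plus alpha data, whatever font went in.

```python
import struct

# Hypothetical layout (NOT Storyboard's format): a header, then one record per
# glyph immediately followed by that glyph's alpha bytes.
MAGIC = b"BFNT"
HEADER = struct.Struct("<4sHB")   # magic, glyph count, bits per pixel
RECORD = struct.Struct("<IHHI")   # code point, width, height, data length


def write_bitmap_font(glyphs, bits_per_pixel=8) -> bytes:
    """glyphs: dict of character -> (width, height, alpha bytes)."""
    out = bytearray(HEADER.pack(MAGIC, len(glyphs), bits_per_pixel))
    for ch in sorted(glyphs):
        w, h, alpha = glyphs[ch]
        out += RECORD.pack(ord(ch), w, h, len(alpha))
        out += alpha
    return bytes(out)


def read_bitmap_font(data: bytes):
    """Inverse of write_bitmap_font, as a renderer might parse it from Flash."""
    magic, count, bpp = HEADER.unpack_from(data, 0)
    if magic != MAGIC:
        raise ValueError("not a bitmap font blob")
    offset, glyphs = HEADER.size, {}
    for _ in range(count):
        cp, w, h, n = RECORD.unpack_from(data, offset)
        offset += RECORD.size
        glyphs[chr(cp)] = (w, h, data[offset:offset + n])
        offset += n
    return bpp, glyphs


font = {"1": (2, 2, bytes([0, 255, 0, 255])), "0": (2, 2, bytes([255] * 4))}
blob = write_bitmap_font(font)
print(len(blob), read_bitmap_font(blob) == (8, font))
```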

If I use one of the font tools you mentioned, can I create my own custom fonts that are usable by Storyboard?

Answer: Yes. You can bring in your own content by adding it to a font file. The typical scenario is emojis. For example, you can make an emoji font file and then output it either as a TrueType font that the font engine understands or as a pre-rendered Bitmap font. The same goes for icons and other vector content too.

If you needed to have something with dynamic coloring, like an icon that can be colored green or red because there's an alert or an “okay” situation, you could totally go that route and make that a font file and then color it in the way you’d like.

Conclusion

Ultimately, fonts for embedded GUI development are flexible and similar to images. Fonts sit at a unique intersection: they are used not only for text, but also for image design elements such as icons inside embedded GUIs.

For more Embedded GUI Expert Talks like this one, check out our YouTube playlist for on-demand videos and upcoming live dates.
