Embedded systems and UI development can be complicated. Factor in social distancing and product teams being forced to continue development remotely, and the situation has become even more complex. But it doesn’t have to be!

In the first of many Embedded GUI Expert Talks with Crank, Thomas Fletcher, Co-Founder and VP of R&D, talks about the embedded UI development tools our clients and our own team are leveraging today to connect remotely and keep embedded projects moving forward.

By watching a replay of Thomas' live YouTube video or by following along with the transcript below, you’ll learn about our feature update for the Storyboard IO Connector and its support for TCP/IP, and how you can leverage it to inject events over a network. This effectively removes the need for the UI and back-end to be physically deployed in the same location.

Finding a collaborative embedded UI development environment - at home

The current situation of the world has caused a lot of teams to break out of their usual office space and work remotely, from home. For most product teams working on the design and development of embedded applications, a remote environment is new and unknown territory to navigate, especially when collaboration is key to developing a cutting-edge embedded UI product.

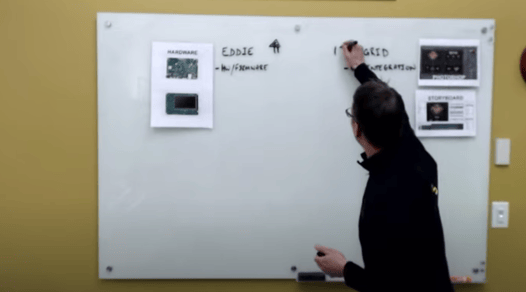

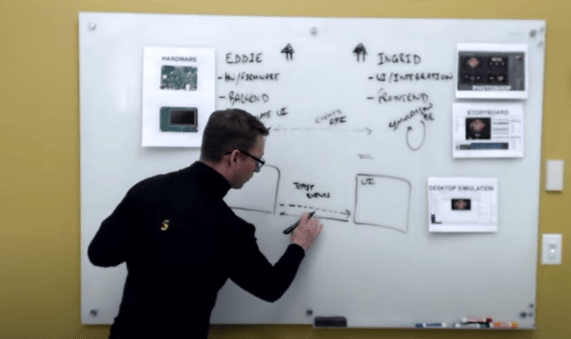

I want to take you through a scenario of two different team members that are on the same embedded UI development project but separate teams within their company, and how they can work and collaborate with one another - remotely. The two team members are Eddie and Ingrid.

Meet Eddie

Eddie is a hardware developer. He is working at home, at his kitchen table, with his portable embedded hardware. The UI development project is a new coffee machine, so he’s working on the hardware, including the I/O controls and the device drivers, and looking at how he's going to get input from the system and relay that data to other parts of the system.

Thomas working on whiteboard, setting up Eddie and Ingrid's work spaces.

Meet Ingrid

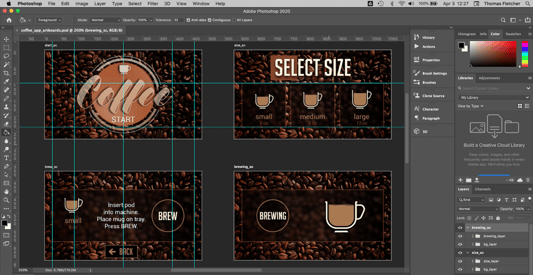

Ingrid is our other teammate in the remote development scenario. Ingrid is also working from home but has a different role than Eddie on the team. Ingrid’s role is to use her laptop to incorporate the content created by the graphic design team. Incorporating content includes Photoshop content, Illustrator content, and all of the other graphic design elements that have been put together for the coffee machine product by the UI designers. Ingrid is working on integrating the graphic designs and bringing them into the user experience, or UX, in order to reflect the reality of what the team wants to put out into the product.

Ingrid's UI graphic designs in Photoshop.

How do you collaborate with remote developers and UI designers?

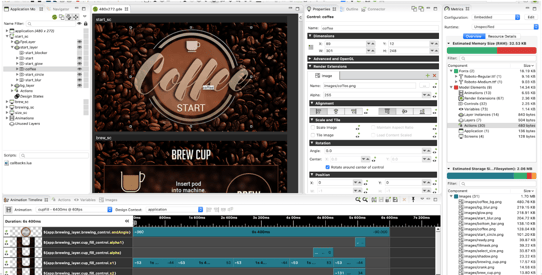

To collaborate remotely, the team chose the embedded UI design and development tool, Storyboard. Ingrid participated in the evaluation and decision process and, in short, chose Storyboard to help bring Photoshop and Illustrator UI graphics and other content into the software while also being able to rapidly create the user interface in a desktop environment with the rest of her team. In other words, Ingrid and Eddie could work together to take the static content and put together the dynamic animations, user interactions, screens, and modalities of the application, all in the same UI development environment.

TIP - When choosing embedded UI technology, make sure the chosen software allows both the product development team and the design team to do their jobs with ease. For example, designers import Photoshop graphics files while developers code in events.

Ingrid's Storyboard development environment including her Photoshop UI design files.

Remotely interacting with hardware in separate remote work locations

If Eddie, on the UI development team, and Ingrid, on the UI design team, were actually in the office working together, they most likely would be working independently. They're working on very different areas of the project, so there's not a lot of tight coupling between them. But as a collaborative team, they would get together to share ideas about what’s possible for their newest project. That doesn't really change in the work-from-home environment.

Using shared drives and communication channels

Teams in their current remote work environment are getting comfortable using communication and engagement tools such as Slack, Google Hangouts, and Zoom to communicate with individuals and groups in their company. Thanks to these technologies, employees are able to work from home fairly effectively because we can bring our tools with us.

In the scenario I’m walking you through, Eddie and Ingrid’s teams have access to a shared repository for their teams, where all content for the UI design and development for their embedded product is kept safe. This includes whole system images and a whole cast of other elements that team members are working on alongside them.

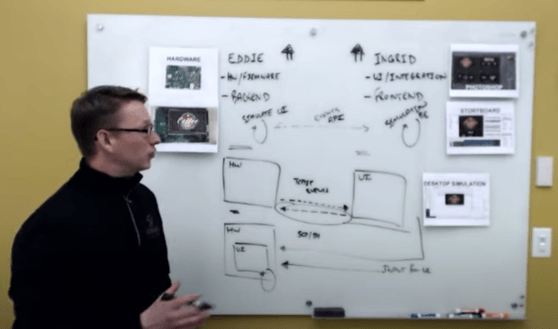

Thomas working on whiteboard, showing how the Storyboard Simulator works.

Finding an embedded design and development tool that works without hardware

For someone like Eddie, who is doing C development and C compilation remotely, the interaction with the hardware is local. Ingrid is also working remotely, and she can work in parallel with Eddie on the UI. She can work at the desktop level because Storyboard allows team members to simulate and work in an environment that doesn't require the embedded hardware.

How do the backend and frontend communicate with one another?

One of the essential design choices at this stage of embedded product development is answering: how do we get the backend components and our frontend talking to one another? What does that coupling look like? The terminology mirrors web design, where the frontend typically means the browser and the backend means the server, and embedded development tends to follow the same split.

Backend - The backend is usually the device drivers, the system logic, and in some cases the business logic of the application.

Frontend - The frontend is the user experience: what the user is actually engaging with, touching, interacting with, etc.

Exchanging data between backend and frontend using simulation

When it comes to exchanging data back and forth between the backend and the frontend, a typical embedded environment could have the frontend and the backend tied or coupled closely together. It could be that you're using a graphics library or calling into C functions, and those C functions are not only manipulating the user interface but also carrying the data that the frontend receives from the backend.

That type of exchange isn’t ideal when teams are working remotely. It introduces too much coupling. If we tie it back to our original scenario, Ingrid would have to tie directly into the source repository that Eddie is also working in. They would also have to synchronize their commits to make sure they are always working in lockstep. Ingrid could introduce only some of the callback functions until Eddie had the functionality ready to go, or else she would have to stop.

Decoupling to work smarter

A better way of working is to decouple this. Decoupling means keeping the same logical operations of the backend and frontend but connecting them with events instead of direct calls. The idea is to have events flow back and forth, giving you a contract or an API.
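As a rough illustration of what an event-based contract can look like, here is a minimal Python sketch. It is not Storyboard's API: the `Event` and `EventBus` classes and the `water_temperature` event name are hypothetical, chosen only to fit the coffee machine scenario. The point is that the backend publishes named events with payloads and never calls a UI function directly.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class Event:
    """One message in the contract: an event name plus a data payload."""
    name: str
    payload: Dict[str, Any] = field(default_factory=dict)


class EventBus:
    """Routes events by name so sender and receiver never call each other directly."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[Event], None]]] = {}

    def subscribe(self, name: str, handler: Callable[[Event], None]) -> None:
        self._handlers.setdefault(name, []).append(handler)

    def publish(self, event: Event) -> int:
        """Deliver the event and return how many handlers received it."""
        handlers = self._handlers.get(event.name, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


# Frontend side (hypothetical): only knows the event name and payload shape.
shown: List[str] = []
bus = EventBus()
bus.subscribe("water_temperature", lambda e: shown.append(f"{e.payload['celsius']} C"))

# Backend side (hypothetical): only knows how to publish the agreed event.
delivered = bus.publish(Event("water_temperature", {"celsius": 92}))
```

With this shape, the set of event names and payload fields is the whole agreement between the two sides, so either side can be replaced without touching the other.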

How do events flow when using an embedded system?

If you're using an embedded system, events could flow back and forth through a message queue, by which the backend or hardware can communicate up to clients like the user interface. The backend sends a series of events and data payloads that represent the system state. Similarly, the UI can communicate back to the backend using a different set of events with different sets of payloads. This type of decoupling means that you can change the transport, because it is decoupled from the actual data payloads and the information that's being relayed.
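The two-way flow can be sketched with a pair of in-process queues. This is only a stand-in for whatever message queue the embedded system provides; the queue names and the `brew_requested` event are assumptions made for illustration.

```python
import queue
from typing import Any, Dict, List, Tuple

# Hypothetical event shape: (event name, data payload).
EventT = Tuple[str, Dict[str, Any]]

to_ui: "queue.Queue[EventT]" = queue.Queue()       # backend -> UI: system state
to_backend: "queue.Queue[EventT]" = queue.Queue()  # UI -> backend: user requests


def drain(q: "queue.Queue[EventT]") -> List[EventT]:
    """Take every event currently waiting in the queue without blocking."""
    items: List[EventT] = []
    while True:
        try:
            items.append(q.get_nowait())
        except queue.Empty:
            return items


# The backend reports state upward; the UI sends a different event downward.
to_ui.put(("water_temperature", {"celsius": 92}))
to_backend.put(("brew_requested", {"size": "large"}))

ui_received = drain(to_ui)
backend_received = drain(to_backend)
```

Because each side only reads from and writes to a queue, the queue could later be swapped for a different transport without changing the event names or payloads.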

Decoupling - Keeping the same logical operations of the backend and frontend, but instead, coupling the operations together with events that flow back and forth.

Decoupling also makes simulation much easier. With decoupling, Ingrid can work independently of what the hardware or Eddie is doing, and at the same time, Eddie doesn't need to know what the UI is actually doing with his data. He can instead have his own feedback loop with a UI simulation using the backend, or work against the API contract. This is part of a good design process.
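Here is one way a simulated backend might look in Python, as a sketch under stated assumptions: `simulated_backend`, `CoffeeScreen`, and the event names are all hypothetical and not part of Storyboard. The simulated source emits the same event shapes the real hardware would, so the UI handler does not need to know which one it is talking to.

```python
from typing import Any, Dict, Iterator, Tuple

# Hypothetical event shape: (event name, data payload).
EventT = Tuple[str, Dict[str, Any]]


def simulated_backend(start_celsius: int, target_celsius: int, step: int) -> Iterator[EventT]:
    """Stand-in for the hardware: emits a heating ramp, then a ready event."""
    temp = start_celsius
    while temp < target_celsius:
        yield ("water_temperature", {"celsius": temp})
        temp = min(temp + step, target_celsius)
    yield ("water_temperature", {"celsius": target_celsius})
    yield ("brew_ready", {})


class CoffeeScreen:
    """Hypothetical UI model that reacts only to events, never to hardware calls."""

    def __init__(self) -> None:
        self.temperature_label = "--"
        self.ready = False

    def handle(self, event: EventT) -> None:
        name, payload = event
        if name == "water_temperature":
            self.temperature_label = f"{payload['celsius']} C"
        elif name == "brew_ready":
            self.ready = True


screen = CoffeeScreen()
events = list(simulated_backend(start_celsius=20, target_celsius=90, step=35))
for event in events:
    screen.handle(event)
```

Swapping `simulated_backend` for a source that reads real sensor data would leave `CoffeeScreen` untouched, which is the property that lets the two roles work in parallel.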

Replacing simulation using TCP/IP connections and events

At this point, Eddie and Ingrid have both been developing within Storyboard, both working through the system using simulation. Eddie's really excited about having his backend working, having live data coming from his hardware. Ingrid has the UI working using simulated backend data on her desktop. So what's the next step for them? Connecting both Eddie’s work and Ingrid’s work together.

To do so, we're going to set up a development environment where the hardware can be providing information to the desktop UI - replacing the simulated backend. This could occur with Ingrid pushing her project into a source repository and Eddie going into the repository to pull it out. But that means that Eddie now has to know how to configure the UI. Similarly, if Ingrid had the hardware, she'd have to figure out how to work on the system image.

A 4 minute Getting Started video on Storyboard using TCP/IP connection.

Instead of Ingrid and Eddie swapping positions, we can leverage the fact that there’s a network connection. Eddie and Ingrid have already been communicating together in an informal fashion as part of their collaborative, remote team. So they can extend and push that down to their embedded hardware or system that they are building by leveraging, for example, a TCP/IP channel or connection to send the events.

Tip: Use a TCP/IP connection to allow team members to collaboratively work in one channel of communication.

Using a TCP/IP connection to send events

With a little bit of configuration on Eddie's side, his hardware, which he’s probably connecting to remotely, can redirect its I/O control over a communication channel that is then funneled across the Internet into Ingrid's simulated UI. This is really powerful because it means that Ingrid and Eddie can work together in one communication channel, for example, Slack. They can collaborate while Eddie generates inputs on their application and those inputs flow, just as they would from the hardware, directly into the user interface. And Ingrid, as the owner of the user experience, can monitor the live data flowing from the hardware and validate any assumptions that were made around the event API that was previously put in place.
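To show the general idea of sending events over TCP/IP, here is a small Python sketch that runs both ends on the local loopback interface. The wire format (one JSON object per line) and every function name are assumptions for illustration; this is not the Storyboard IO Connector protocol, and a real setup would use Eddie's and Ingrid's actual network addresses.

```python
import json
import socket
import threading
from typing import Any, Dict, List, Tuple


def encode_event(name: str, payload: Dict[str, Any]) -> bytes:
    """Serialize one event as a single newline-terminated JSON line."""
    return (json.dumps({"name": name, "payload": payload}) + "\n").encode("utf-8")


def receive_events(listener: socket.socket, sink: List[Dict[str, Any]]) -> None:
    """UI side: accept one connection and collect events until the sender closes."""
    conn, _ = listener.accept()
    with conn, conn.makefile("r", encoding="utf-8") as lines:
        for line in lines:
            sink.append(json.loads(line))


def send_events(host: str, port: int, events: List[Tuple[str, Dict[str, Any]]]) -> None:
    """Hardware side: connect and stream events over the TCP connection."""
    with socket.create_connection((host, port)) as sock:
        for name, payload in events:
            sock.sendall(encode_event(name, payload))


# Loopback demo: port 0 asks the OS for any free port.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)
host, port = listener.getsockname()

received: List[Dict[str, Any]] = []
ui_thread = threading.Thread(target=receive_events, args=(listener, received))
ui_thread.start()

send_events(host, port, [
    ("water_temperature", {"celsius": 92}),
    ("cup_detected", {"present": True}),
])
ui_thread.join(timeout=5)
listener.close()
```

The event names and payloads are the same ones the in-process version would use; only the transport changed, which is why neither side has to exchange source code or reconfigure the other.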

There's always going to be a possibility of misunderstanding or lack of clarity when working on projects remotely but the idea here is to reduce that. Ingrid can quickly jump on to UI adjustments that need to be made in her local environment, and similarly, if there's something wrong with the live data being generated, Eddie can jump into his environment. But they don't actually have to exchange any source code or change any configuration information. By doing so, the process becomes efficient and effective.

Decoupling to work in parallel

It's not just about going one way. We can have events flowing down from the hardware to the user interface in a simulated fashion. We can have a clear API, and event decoupling gives us the ability to work in parallel. At this stage, two team members are working effectively and bringing their individual elements together, but still separately. What's the next piece?

Taking that user interface and running it right on top of the hardware. This would mean that Eddie and Ingrid could be ready to start sharing their two components with the rest of their team members for feedback. They could also start talking about pushing to the source repository without any concern. Decoupling now goes into a third phase, which is running Ingrid's UI on the hardware, or putting the UI onto the embedded display.

Now you might be thinking that this is an easy step: Ingrid just pushes her work out to the source repository so Eddie can pull it down. He'll then do the configuration and build it out on his end. It’s definitely possible, but at that point the whole team is seeing the configuration, even if they are using branches. If there are feedback and iterations that need to occur, it is better that Ingrid and Eddie continue the work effectively coupled together in their independent areas rather than handing off for tuning. If Ingrid can stay in the loop on the UI when changes need to be made and Eddie can be right there to respond, then the hardware interaction and the data going in and out is going to work. The visual representation of the UI can then be tuned accurately so that it looks clear and precise on the chosen device.

Communicating with your team and embedded system is key

To make sure the visual representation of the UI is accurate on the device, Ingrid can use the network connection to deploy straight from her Storyboard development environment all the way down to the target hardware using secure copy and SSH connections. Eddie can sit there watching the hardware, or point a webcam at it, and continue the Slack conversation with Ingrid while she watches the interaction on the hardware. This type of transfer allows Ingrid to control what's being pushed to the embedded target while Eddie focuses on what the system is doing and what it needs to be doing. They can work collaboratively in that way and see their coffee machine project actually running on the hardware.

Thomas displaying how decoupling and events allows UI development to occur in parallel.

Using an I/O connector to generate events

Up until now, Ingrid and Eddie have been working effectively from home with the efficient design and development process they’ve put in place. But Eddie was so focused on getting data from his regular I/O connectors that he completely forgot about the touchscreen driver, and Ingrid has already pushed her UI to the hardware. Now what?

It's a touch user interface, so they want to be able to not just see the data coming from their sensors into the UI but actually simulate what the user experience looks like for the coffee machine. Because the system is event-driven, we can leverage the same communication channel we had going when Ingrid was running the UI on her desktop and the hardware was providing input to it and receiving data from it.

We can take that same channel so she can generate input for the UI directly from her development environment. This works by using an I/O connector that generates events. Alternatively, she can provide Eddie with an automated playback file: something that takes events that would normally be generated by the user touching the screen and injects them back into the user interface. Storyboard supports both options.
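The playback-file idea can be sketched in Python as follows. The file format (one JSON object per line with a millisecond offset) and the `record` and `replay` helpers are hypothetical and are not Storyboard's playback format; they only show how recorded touch events can be injected back into a UI in order, with their original timing.

```python
import json
import os
import tempfile
import time
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical recorded entry: (offset in ms, event name, payload).
TimedEvent = Tuple[int, str, Dict[str, Any]]


def record(path: str, timed_events: List[TimedEvent]) -> None:
    """Write each event as one JSON line with its time offset."""
    with open(path, "w", encoding="utf-8") as f:
        for offset_ms, name, payload in timed_events:
            f.write(json.dumps({"t_ms": offset_ms, "name": name, "payload": payload}) + "\n")


def replay(path: str,
           inject: Callable[[str, Dict[str, Any]], None],
           sleep: Callable[[float], None] = time.sleep) -> int:
    """Re-inject recorded events in order, waiting out the gap before each one."""
    previous_ms = 0
    count = 0
    with open(path, encoding="utf-8") as f:
        for line in f:
            entry = json.loads(line)
            sleep((entry["t_ms"] - previous_ms) / 1000.0)
            previous_ms = entry["t_ms"]
            inject(entry["name"], entry["payload"])
            count += 1
    return count


# A press and release on a hypothetical "brew" button, 150 ms apart.
touches: List[TimedEvent] = [
    (0, "press", {"x": 120, "y": 80}),
    (150, "release", {"x": 120, "y": 80}),
]
injected: List[Tuple[str, Dict[str, Any]]] = []
waits: List[float] = []  # captures the delays instead of really sleeping

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "brew_button.jsonl")
    record(path, touches)
    replayed = replay(path, lambda n, p: injected.append((n, p)), sleep=waits.append)
```

Passing `sleep` as a parameter keeps the demo instant; leaving it at the default `time.sleep` would replay the touches at their recorded pace.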

With embedded UI development in general, you're talking about decoupling your teams from one another so they can work in parallel and work effectively, whether that's in the office or, as in this case, at home, where we can't just walk over to a coworker’s desk and say, "Okay, can you run this on the hardware now? I want to take a look at it." It's a slightly different mode of operating, but it's not too far off from normal operation.

Everything Ingrid and Eddie have incorporated into their process is good practice from an embedded architectural design perspective: using decoupling to allow teams to work independently, in parallel.

Tip: Using an I/O connector within embedded software, or an automated playback file, allows team members to generate events or input for the UI directly from their individual development environments.

Wrap-Up

To summarize, keep abstractions clean to allow them to be decoupled for the event-based interface. Keep options open in terms of the modes in which teams can share elements of the frontend and the backend. Be able to test independently to push your way through your work, and then move your product along step by step without necessarily engaging the whole team. At the end of all the development and design tweaks, you’ll have something that is almost product ready and prepared to go into a repository to be shared with everyone else on the project. Eddie and Ingrid can then be very confident that what they built is solid and working on real hardware. That means your own teams at home are capable of doing the same, with the proper processes and tools in place to work effectively.

For more Embedded GUI Expert Talks like this one, check out our YouTube playlist for on-demand videos and upcoming live dates.
