Thomas Fletcher, Co-Founder and VP of R&D, talks about the specific use case of building rich UIs populated with images and graphics, and how you can fit all of those resources into your embedded system, in our Embedded GUI Expert Talks with Crank Software. Or, as Thomas likes to summarize it, “making good computer memory and image choices.”

By watching a replay of Thomas and Rodney's live YouTube video or by following along with the transcript below, learn how to use frame buffers to optimize image memory, understand the decode phase, use hardware accelerators and other technologies for decompression, and explore further graphics optimization solutions. If you missed part 1 on embedded graphics and memory optimization considerations, click here.

Or, jump straight ahead to the question and answer portion of the live video.

Types of Embedded UI Memory Configurations

In part 1 of this memory series in our Embedded GUI Expert Talks, we looked at the two main types of memory in use: Flash memory and dynamic RAM.

Flash memory:

Flash memory is usually plentiful in embedded systems. But because of Flash’s access characteristics, it strongly favors reading content over writing it. As a result, it's used a lot for storage: your code and your program's data. It can be used as persistent storage, but its access time for writes is very slow.

RAM memory:

RAM is very dynamic. All of your dynamic content tends to live in RAM, meaning content that you're reading and writing very frequently. But RAM is more costly, and as a result, it tends to be in much more limited supply.

What does the type of graphics memory have to do with the topic of embedded UI imagery? What is the relationship between graphics memory, images, and bringing them together?

When we think about how embedded UIs are being created these days, we're seeing a shift away from graphic primitives like fills, polygons and rectangles, toward content crafted in design tools such as Photoshop, Illustrator, and Sketch. That content is then typically handed off to a development or engineering team as a series of images to be placed and included in the embedded UI.

Creating embedded graphics in design tools and importing to Storyboard

Teams use desktop development environments and graphic design tools to develop the static representation of the UI. Engineers then take that and convert it into a live representation of the embedded user interface product. That's where a lot of the richness comes from: graphic design tools give us a lot of rich content and functionality that can be pre-processed and generated ahead of time.

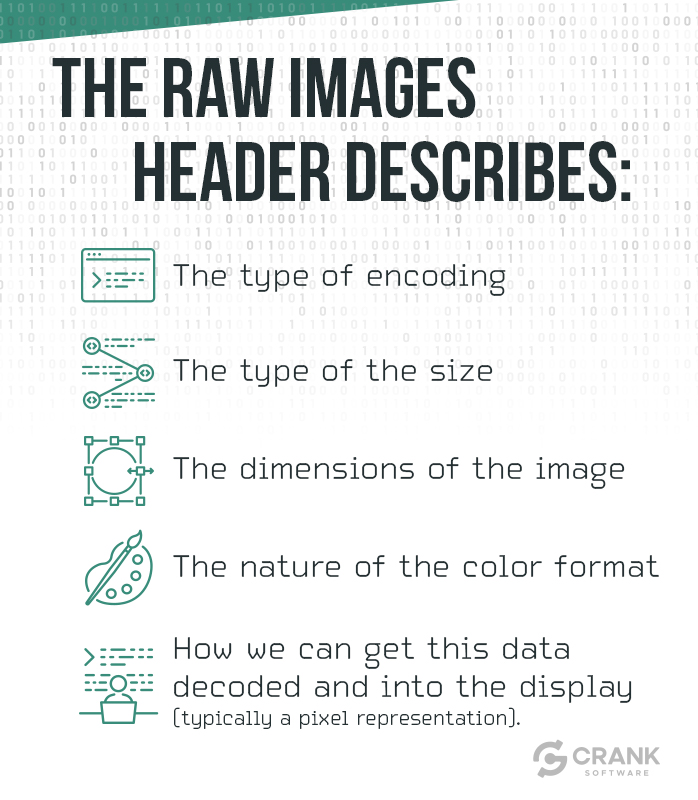

So say you or a team member is starting in Photoshop and moving into a Storyboard environment to represent your embedded graphics and images. When we take content out of a graphics design tool, we're exporting that imagery as a number of sliced images. In this case, the Photoshop content has been decomposed into 31 different images used within our user interface, and that content is now positioned, shown, hidden, animated, alpha blended, and composited together; all sorts of operations are happening with it. And that's all happening in the context of your graphics design tool.

Using frame buffers to optimize embedded graphics memory

This represents a challenge. As we discussed earlier, RAM is a limited resource, and it's also what we typically use for display frame buffers. We have all of these images sitting in some sort of source format: PNG images, JPGs, bitmap files, or even raw images. There are a lot of different formats for storing embedded images. Most of the formats we're going to talk about are encoded formats, meaning they're compressed so that they take up less space in storage. Our goal is to take this source and get it onto the display.

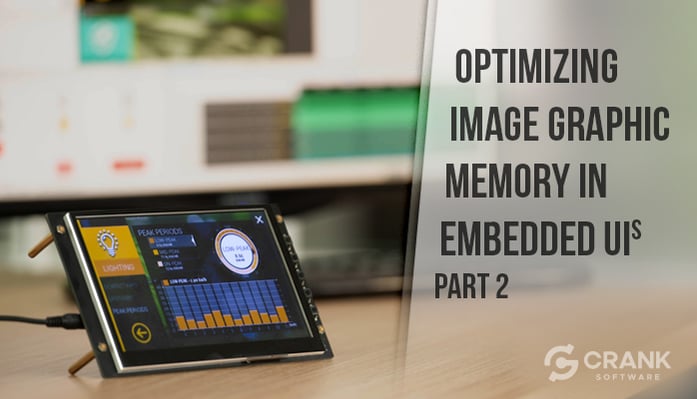

In an ideal world, we would just be able to take these raw images and move them over into the display. These raw images typically are organized, regardless of the specific format, with some sort of header followed by the pixel data of the image.

Figure 1 - what the raw image's header describes
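To illustrate the "header followed by pixel data" layout, here is a minimal Python sketch that builds and parses such a blob. The header layout (width, height, bytes per pixel, padding) is a hypothetical format invented for this example; it is not PNG, BMP, or Storyboard's own raw format.

```python
import struct

# Hypothetical header layout (illustration only), little-endian:
#   uint16 width, uint16 height, uint8 bytes_per_pixel, 3 bytes padding
HEADER = struct.Struct("<HHB3x")

def make_raw_image(width, height, bpp, pixels):
    """Build a raw image blob: header followed by tightly packed pixel data."""
    if len(pixels) != width * height * bpp:
        raise ValueError("pixel data does not match header dimensions")
    return HEADER.pack(width, height, bpp) + pixels

def parse_raw_image(blob):
    """Return the header fields and a zero-copy view of the pixel data."""
    width, height, bpp = HEADER.unpack_from(blob, 0)
    expected = width * height * bpp
    pixels = memoryview(blob)[HEADER.size:HEADER.size + expected]
    if len(pixels) != expected:
        raise ValueError("truncated pixel data")
    return width, height, bpp, pixels
```

Because the pixel data after the header is already in display order, a reader only needs the header to know how many bytes to copy; nothing has to be expanded.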

There are tons of different encodings for displays as well. You can have palette-based displays, or 16-bit or 32-bit color, but generically, at the end of the day, we're representing colors on the display. Ideally, we'd move these pixels directly from source to display. That would be a simple copy operation: our source could sit in storage, head over to the display, and we'd be done. If only life were that simple.

Understanding the decode phase

Because we're talking about a number of different embedded image formats, including PNG, JPG, and BMP, which are encoded formats, we typically have to run through a decode process as we move our image source onto the display. The decode phase is where the complication comes in. This is where making good choices about where your images live and which image formats you use comes into play, because the decode can be split into two different paths. If we have a raw or direct image format, meaning the data described by the header is very closely related to the display's pixel format, then on this decode path we can identify that this is raw data and push it directly into the display. What's great about this is that it is all read operations, so it's perfectly suitable for content sitting in Flash memory. Flash access time for reading is very fast: not as fast as RAM, but fast enough for us to take the content and composite it onto a display, which is going to be sitting in RAM.

Tip: When working with a raw or direct image format, the decode phase becomes a read operation so it is suitable for content in Flash.

What about the other path? On this path, we're changing the format, and usually that means some sort of decompression: we need to take the encoded, shrunk-down data and expand it. There are lots of different encoding formats, both lossy and lossless. Some complicated ones are optimized for minimizing the footprint of the image; other compression formats are optimized for fast decoding. Regardless, this decompression has to go into a temporary buffer. We'll call this our scratch buffer, and it gives us a place to expand the image into a native or direct format that we can push into the display. Once the image has been expanded into the scratch buffer, we can take that scratch buffer and push it directly to the display.
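The two decode paths can be sketched in Python. This is an illustration only: the image dictionary layout and function names are hypothetical, pixels are assumed to be tightly packed rows, and zlib stands in for a real image codec (PNG does use DEFLATE internally, but it also adds filtering and chunk structure that this sketch ignores).

```python
import zlib

def blit(framebuffer, fb_width, bpp, x, y, img_width, img_height, pixels):
    """Copy packed pixel rows into the frame buffer at (x, y).

    Assumes the image lies entirely inside the frame buffer.
    """
    row_bytes = img_width * bpp
    for row in range(img_height):
        dst = ((y + row) * fb_width + x) * bpp
        src = row * row_bytes
        framebuffer[dst:dst + row_bytes] = pixels[src:src + row_bytes]

def draw_image(framebuffer, fb_width, bpp, x, y, image):
    """Draw an image via the direct path or the scratch-buffer decode path.

    Returns the number of scratch-buffer bytes the draw required.
    """
    w, h = image["width"], image["height"]
    if image["encoding"] == "raw":
        # Direct path: the source is only read, so it can stay in Flash.
        blit(framebuffer, fb_width, bpp, x, y, w, h, image["data"])
        return 0
    # Decode path: expand the encoded data into a scratch buffer in RAM first.
    scratch = bytearray(zlib.decompress(image["data"]))
    if len(scratch) != w * h * bpp:
        raise ValueError("decoded size does not match image dimensions")
    blit(framebuffer, fb_width, bpp, x, y, w, h, scratch)
    return len(scratch)
```

The return value makes the cost difference visible: the raw path needs no extra RAM, while the decode path needs a scratch buffer as large as the fully expanded image.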

Using hardware accelerators and other technologies for image decompression

There are some technologies and hardware accelerators that will actually allow us to decompress and push out to the display all in one step. Specialized hardware can definitely help with this, but regardless, there's always a requirement to put this scratch buffer in RAM, because we need to both read from it and write to it. We read from it when we go to the display, but when we do the decompression, we take the source bits of the image and write the expanded result into the buffer. That access needs to be relatively fast, so this is a RAM memory cost for us. For example, if I have a PNG image that I exported from Photoshop, it may be fantastic: a very small footprint in terms of storage. But now, in order for that image to get up on the display, it has to go through this decompression phase, which takes a little bit of time and memory.

Typically, an engine or an application will look at this and say, let's minimize the CPU time that we're spending on decompression. We don't want to decompress that image over and over again. So what we'll do is cache the results, that is, the scratch buffers. The scratch buffer ultimately ends up being the full size of the image as it would be on the final display, and we do that so that we can skip the decompression stage and go directly out to the display after we've decompressed the image once. Then come the resource management strategies: how big is my cache, when do I evict images from it, and what's the life cycle of an entry? A lot of complexity goes into that strategy, all so that we can balance using RAM for images against storage costs.

Tip: A cached scratch buffer ends up being the full size of the image as it would be on the final display. We accept that cost so that we can skip the decompression stage and push directly to the display after we've decompressed the image once.
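To make the caching trade-off concrete, here is a minimal Python sketch of a decoded-image cache bounded by a RAM budget. It assumes a plain least-recently-used eviction policy and uses zlib as a stand-in decoder; the class and method names are hypothetical, not a Storyboard API, and a real engine's eviction strategy would be more context-aware.

```python
from collections import OrderedDict
import zlib

class DecodedImageCache:
    """LRU cache of decoded (scratch) buffers, bounded by a RAM budget in bytes."""

    def __init__(self, budget_bytes, decode=zlib.decompress):
        self.budget = budget_bytes
        self.decode = decode
        self.used = 0
        self.entries = OrderedDict()  # name -> decoded bytes, oldest first
        self.decodes = 0  # how many times we paid the decompression cost

    def get(self, name, encoded):
        if name in self.entries:
            self.entries.move_to_end(name)  # mark as most recently used
            return self.entries[name]
        decoded = self.decode(encoded)
        self.decodes += 1
        if len(decoded) > self.budget:
            return decoded  # too big to cache: draw it once and drop it
        # Evict least recently used entries until the new image fits.
        while self.used + len(decoded) > self.budget:
            _, evicted = self.entries.popitem(last=False)
            self.used -= len(evicted)
        self.entries[name] = decoded
        self.used += len(decoded)
        return decoded
```

Calling `get` a second time for the same name returns the cached buffer without decoding again, which is the CPU saving described above; the price is the RAM held in `entries`.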

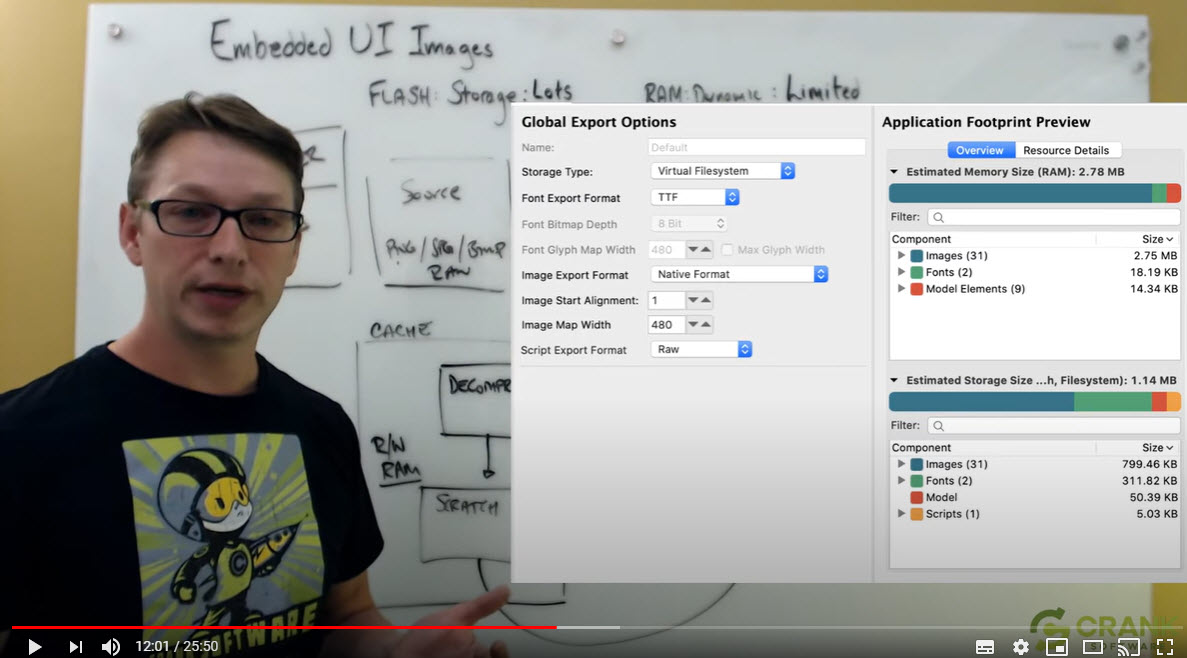

Working in Storyboard to view system memory metrics

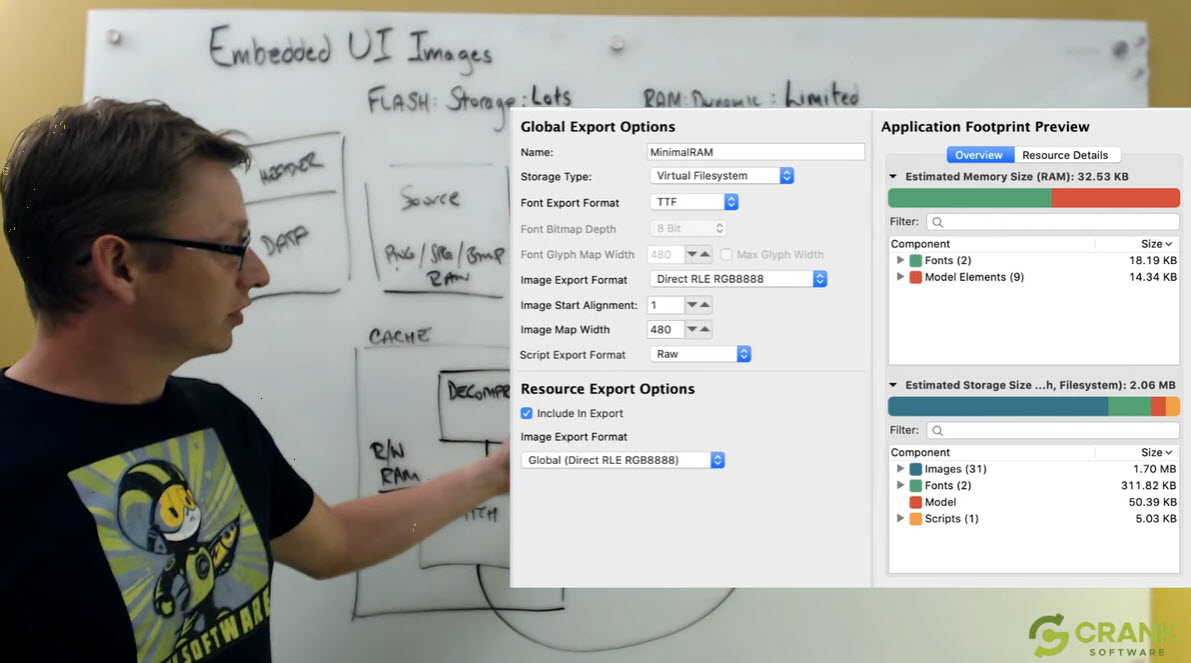

Let's take a look at what this type of scenario would look like if we pulled in Photoshop content. We have a tool inside of Storyboard that allows you to decompose your images and get a measure of both the storage costs and the dedicated graphics memory costs that are going to be incurred. If you're watching the video, you'll see that the Photoshop content came in as 31 files, or 31 images that are going to be used. Not all of those images are going to be live on every screen at all times; we'll have different modes and screens, which is where a cache becomes effective.

But if I take a look at the numbers here, uncompressed, we need almost 3 megabytes of memory. If I'm working on a low-end platform, an MCU type of platform where I may have a limit of 1 MB or 2 MB, most of that is already occupied by my frame buffer: our 480 by 272 display here uses half a megabyte of memory on its own. We want to manage these resources.

Can you shift the balance away from doing all of this in RAM, and move that memory cost into read-only Flash instead?

Storyboard gives you the ability to change the encoding of the source images from your design tool on the fly during the export process. As an embedded graphics designer and an engineer working together, we can continue to build a rich user interface with all of these images, and then see how the design maps onto the target's Flash and RAM sizes. If I change all of the images from encoded images (the default when you bring them in from Photoshop) to raw or direct images, you can see that we're converting them all to raw 888 pixels, that is, 24-bit. Now we can take the direct decode path and don't need any scratch RAM, so that 2.75 megabytes of RAM disappears right off of our usage metrics. Instead, the cost shifts into storage. The storage costs go up, but they are now one-to-one with our display costs. And because we typically have an abundance of Flash, within limits and reason, that's an okay trade-off.
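The trade-off reported here can be approximated with simple arithmetic. This Python sketch is a simplified model, not Storyboard's actual metric calculation: it ignores headers, alignment, and caching, and the function name and image sizes used with it are hypothetical.

```python
def memory_costs(images, raw, bytes_per_pixel=3):
    """Return (flash_bytes, ram_bytes) for a set of images.

    images: list of (width, height, encoded_size_bytes) tuples.
    raw=False: images stay encoded in Flash and are decoded into RAM.
    raw=True: images are stored as raw 888 pixels and drawn directly from Flash.
    """
    flash = ram = 0
    for width, height, encoded_size in images:
        decoded = width * height * bytes_per_pixel
        if raw:
            flash += decoded  # storage is one-to-one with the pixel data
        else:
            flash += encoded_size
            ram += decoded  # scratch/cache buffer for the decoded image
    return flash, ram
```

For example, with a made-up full-screen 480 by 272 background that compresses to 40,000 bytes plus a 100 by 50 icon that compresses to 2,000 bytes, the encoded configuration costs 42,000 bytes of Flash and 406,680 bytes of RAM, while the raw configuration moves all 406,680 bytes into Flash and needs no decode RAM.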

Managing dedicated graphics resources with further optimization

Using run length encodings

There are other choices we can make if we want to optimize further. We could look at a decode strategy that gives us some level of compression, perhaps not as much as some of the more sophisticated algorithms, but still lets us draw directly to the display. That's run-length encoding.

Run-length encoding can be implemented almost as efficiently as a direct draw, because each run is really just a pixel color followed by a count. If I turn that on, you'll see that my storage costs drop dramatically, to a little below 2 megabytes, about 1.7 megabytes. We've saved almost an entire megabyte with this compression, and we're not using any additional RAM because we can continue down the raw, direct draw path. These are choices you can make in your design tool when you're building your embedded user interfaces to manage image resources.

Tip: Run-length encoding doesn’t use any additional RAM, because decoding continues down the raw, direct draw path.
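Here is a minimal run-length encoding sketch in Python, following the "pixel color followed by a count" layout described above. The one-byte count (at most 255 pixels per run) and the function names are assumptions. The decoder expands runs straight into the frame buffer without allocating a scratch buffer, which is why RLE can stay on the direct draw path; for simplicity it assumes the image spans the full frame-buffer width, so a run can continue across rows.

```python
def rle_encode(pixels, bpp=3):
    """Encode packed pixels as runs of (color bytes, 1-byte count), max 255 per run."""
    out = bytearray()
    i, n = 0, len(pixels)
    while i < n:
        color = pixels[i:i + bpp]
        count = 1
        # Extend the run while the next whole pixel has the same color.
        while (count < 255 and i + (count + 1) * bpp <= n
               and pixels[i + count * bpp:i + (count + 1) * bpp] == color):
            count += 1
        out += color
        out.append(count)
        i += count * bpp
    return bytes(out)

def rle_draw(encoded, framebuffer, offset, bpp=3):
    """Expand runs straight into the frame buffer; no scratch buffer is allocated.

    The encoded source is only read sequentially, so it can stay in Flash.
    Returns the number of frame-buffer bytes written.
    """
    pos = offset
    for i in range(0, len(encoded), bpp + 1):
        color = encoded[i:i + bpp]
        count = encoded[i + bpp]
        framebuffer[pos:pos + count * bpp] = color * count
        pos += count * bpp
    return pos - offset
```

With this layout, a long run of identical pixels (a flat background or solid fill) costs 4 bytes in total instead of 3 bytes per pixel, while noisy photographic content full of runs of length 1 actually grows. That is one reason RLE tends to suit UI artwork better than photos.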

Using a hybrid solution

Now, we've been talking as if the only choice is all in Flash or all in RAM, but there's a hybrid approach as well. Depending on the rendering technology that you're using (and Storyboard supports a variety of different image formats), you can mix and match these scenarios. A hybrid solution lets me leverage the RAM that I have. Maybe I've decided that in my system configuration, I can't fit all of the images in RAM, because I need a certain amount of dynamic memory for my display, for my variables and stacks, my general heap, general operations, etc. But I am willing to commit a certain amount of memory to a cache. So I can put some of my content in Flash using a direct format, and decompress other content into RAM and maintain it in the cache.

Why use both Flash memory and RAM memory for optimizing computer memory? What are the benefits?

The benefit is that even though Flash is perfectly suitable for reading in terms of access time, it is still slower than RAM. Every image that's going to be composited has to be read through, so if I can get faster access time from RAM, then by all means, that's what I'd like to do. The other factor is Flash throughput when we're doing a long series of small accesses: the total throughput isn't always as high as it could be. If I put a larger image into Flash and get a long run of sequential Flash reads, I'm going to hit my maximum performance on the Flash side. This matters because rendering the total scene involves reading a number of different images, compositing them together, and putting them into the final display.

Looking now at this hybrid scenario, what I've done is go through and identify individual images. The full background images or nearly full-display images, I put into Flash with RLE (run-length) encoding to keep their size minimal. The other content (the series of smaller images, the pieces used in animations, little glows and pops, etc.) I leave in its compressed format and decompress into RAM. That's not to say you couldn't also store those in a raw format and then copy them into RAM, but that's a little bit wasteful, because you'd have the full-size image in storage and again in RAM. You might as well do a one-time decompression into a cache and manage it that way.
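The hybrid placement decision can be sketched as a simple planning pass. The 50% area threshold, the strategy labels, and the function name are all hypothetical; a real project would tune these against its own Flash, RAM, and performance measurements.

```python
def plan_hybrid(images, display_w, display_h, cache_budget, bpp=3, large_fraction=0.5):
    """Assign each image to a hypothetical 'flash_direct' or 'ram_cache' strategy.

    images: dict of name -> (width, height).
    Images covering at least `large_fraction` of the display (backgrounds,
    near-full-screen art) are drawn directly from Flash (e.g. RLE). Smaller
    pieces stay compressed in storage and are decoded once into the RAM cache.
    """
    display_area = display_w * display_h
    plan, cache_bytes = {}, 0
    for name, (w, h) in images.items():
        if w * h >= large_fraction * display_area:
            plan[name] = "flash_direct"
        else:
            plan[name] = "ram_cache"
            cache_bytes += w * h * bpp  # decoded size held in the cache
    if cache_bytes > cache_budget:
        raise ValueError(f"cache needs {cache_bytes} bytes, budget is {cache_budget}")
    return plan, cache_bytes
```

With a full-screen background plus a few small glows and buttons, only the small pieces count against the cache budget, which keeps the RAM commitment predictable.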

Live Q&A on Optimizing Image Graphics Memory

When it comes to UIs with animated screen transitions, how much does it impact the buffer?

Answer: There are two different ways to look at animated screen transitions. One is an animation where you animate the content of a single display buffer, constantly recompositing that scene during the animation; when you're finished, you effectively single-step and jump to the next screen. In that case, it's the same scenario and the same cost as compositing one display. You're just making an instantaneous transition at the end of your animation to the new display and pulling in new content.

Where are the costs when it comes to optimizing image graphics?

Answer: For new images that may be compressed and need to be decoded, there is a decompression stage and most UI technologies are going to give you a mechanism, as a first approach, by which you can preload content. If you know what's coming or you know that there's a significant decompression cost, you can build that into your startup time or mitigate it during the operation.

The second animation approach is to animate two separate, distinct screens or displays. Again, without getting too specific to any particular hardware, there are lots of different ways to do that. Sometimes you can use two display buffers, in which case you would composite one display buffer and then composite the other. All the same rules apply in terms of your cache or resource management. Those two display buffers would then actually be animated and move together. If you think of a fade in or fade out, you might be able to leverage hardware for that, in which case the hardware works with the buffers directly and you won’t have to recomposite the scene. So it's really a scenario-by-scenario situation. But in terms of overall costs, it's the same as going from one display to the next, and any sophisticated cache management strategy is going to take that into consideration: it knows the context of your operation and where you are in your steps, so that content being removed from the cache isn't immediately required back in the cache.
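For the fade case, here is what a per-pixel cross-fade between two finished display buffers computes, written in Python as a software stand-in for what blending hardware might do. The function name and the rounding scheme are assumptions; the point is that it reads the two already-composited buffers directly and never recomposites the scene.

```python
def cross_fade(buf_a, buf_b, alpha):
    """Blend two equally sized 8-bit-per-channel buffers; alpha 0 -> A, 255 -> B."""
    if len(buf_a) != len(buf_b):
        raise ValueError("buffers must be the same size")
    if not 0 <= alpha <= 255:
        raise ValueError("alpha must be in 0..255")
    inv = 255 - alpha
    # Weighted average per channel, rounded to the nearest integer.
    return bytes((a * inv + b * alpha + 127) // 255 for a, b in zip(buf_a, buf_b))
```

Stepping `alpha` from 0 to 255 over the transition produces the fade; each step costs one pass over the two buffers regardless of how many images went into composing them.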

Is there a general rule of thumb for the required amount of Flash, RAM and the buffer size that you need for a UI application?

Answer: There is no rule of thumb, but there is basic math that you can do, and frame buffers are probably the easiest. Your frame buffer requirement is the width times the height of your display, multiplied by your color depth in bytes per pixel. Here, I'm assuming a scenario where we're doing direct draw and not palette-based color; we would need an entire other session to talk about palette-based images and palette-based displays, and their benefits and drawbacks. So if I'm looking at 480 by 272 on our display, with 24-bit color stored as 32 bits per pixel, a full 888 for my RGB (red, green and blue), I'm looking at roughly 500K: 480 × 272 × 4 bytes = 522,240 bytes. If I go to 16-bit, the 5-6-5 color format, meaning 5 bits, 6 bits, and 5 bits for red, green and blue, I decimate the number of colors, but I cut the frame buffer memory requirement in half, so that's a big savings.
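The frame buffer math can be written as a one-line helper. This is a sketch; the function name is hypothetical, and it assumes packed pixels with no row padding or alignment requirements, which real display controllers may impose.

```python
def framebuffer_bytes(width, height, bits_per_pixel, buffers=1):
    """Frame buffer RAM = width x height x bytes per pixel, times the buffer count."""
    return width * height * bits_per_pixel // 8 * buffers
```

For a 480 by 272 display, this gives 522,240 bytes at 32 bits per pixel, 391,680 bytes for packed 24-bit pixels, and 261,120 bytes at 16-bit 5-6-5. Double buffering at 16 bits per pixel brings you back to 522,240 bytes.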

How does it affect the rest of your calculations downstream?

Answer: These calculations depend on your source images. You can decimate your source images up front, or you can decimate them as you decompress them into RAM. Ideally, if you're using a raw format, they're decimated at the source.
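Decimating 888 source pixels to the 16-bit 5-6-5 format amounts to dropping the low-order bits of each channel. Here is a minimal Python sketch, assuming simple truncation (no rounding or dithering) and little-endian output byte order; the function names are hypothetical.

```python
def rgb888_to_rgb565(r, g, b):
    """Pack 8-bit R, G, B into one 16-bit 5-6-5 value by dropping low bits."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def decimate_888_to_565(pixels):
    """Convert packed 888 bytes to little-endian 565 bytes: 3 bytes/pixel -> 2."""
    out = bytearray()
    for i in range(0, len(pixels), 3):
        value = rgb888_to_rgb565(pixels[i], pixels[i + 1], pixels[i + 2])
        out += value.to_bytes(2, "little")
    return bytes(out)
```

Doing this once at export time (decimating at the source) means both the stored image and any raw draw from Flash are a third smaller than packed 888, with no per-frame conversion cost.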

How does Storyboard handle frame buffers?

Answer: Storyboard lets you look at the sum total of all of your screens and provides a summary of all the resources used, as if everything were being used in one shot. The summary includes details on image resources, font resources, and script resources. It leaves the frame buffer display calculations up to you, because you might want to be single buffered (unlikely), double buffered (more likely), or even triple buffered in some scenarios. With that calculation taken care of, you can say, “well, that would be my ultimate high watermark.” But realistically, different screens have different content, and you're unlikely to be using all of your graphical assets in one shot or one scenario. So Storyboard also breaks out the metrics so you can see how much memory any individual screen uses. That lets you identify the resources used on each screen, so you get a better idea of both your ultimate high watermark and your realistic operating watermark on a screen-by-screen basis.
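The high watermark versus operating watermark idea can be sketched as follows. The data layout and function name are hypothetical, and in a real project the per-screen numbers would come from Storyboard's metrics. Shared assets are counted once toward the high watermark, and frame buffers are added on top, since that calculation is left to you.

```python
def watermarks(screens, framebuffer_bytes, buffer_count=2):
    """Return (high_watermark, operating_watermark) in bytes.

    screens: dict of screen name -> dict of resource name -> bytes.
    High watermark: every resource on every screen loaded at once.
    Operating watermark: the most demanding single screen.
    """
    fb = framebuffer_bytes * buffer_count
    unique = {}
    for resources in screens.values():
        unique.update(resources)  # an asset shared by several screens counts once
    high = fb + sum(unique.values())
    operating = fb + max(sum(r.values()) for r in screens.values())
    return high, operating
```

The gap between the two numbers is roughly what a well-managed cache lets you avoid committing to RAM.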

Wrap Up

I really wanted to give you a bit of a discussion point or overview on why it is that making good, smart choices around the types of images that you're using, and the image formats that you're using can have a significant impact on your system. Embedded UIs today are using far more graphical resources than can comfortably fit entirely into RAM. Ideally, they can all fit into Flash in their native format, but even when they can't, we can compress them down and we have a variety of techniques to give us a lot of richness and a lot of variety for the embedded user interface through the use of images.

For more Embedded GUI Expert Talks like this one, check out our YouTube playlist for on-demand videos and upcoming live dates.
