In today's world, touchscreens are everywhere: in our mobile phones, car dashboards, smart home appliances, and industrial automation. We rely on touch control panels for most of our tasks. A strong user experience plays a vital role in gaining market share and attracting customers, so manufacturers compete to make that experience effortless and enjoyable for users.

But is this enough to attract customers?

As technology evolves, so do users' requirements and expectations for an interface that is intuitive yet distinctive. Introducing a voice- or gesture-based UI can help set a brand apart from the rest.

Voice & Gesture Are the Emerging Trends

Voice assistants such as Amazon's Alexa, Google Assistant, and Apple's Siri have seen a meteoric rise. They are not limited to phones and smart speakers: higher-end automotive manufacturers like Tesla, Mercedes, and BMW also use voice assistants to enhance the driving experience.

Adding voice assistance to cars provides a hands-free, intuitive way to interact with devices. More broadly, voice-based UIs have improved accessibility for users with physical limitations or visual impairments, and voice assistants help individuals with mobility challenges control their environment easily.

Several factors affect the quality of voice interactions, including connectivity, processing speed, the surrounding environment, recognition errors, and how well the system interprets different accents. The technology can be applied to smart home devices, cars, gaming, and the medical field.


So far, gestural UI has been used mainly in the gaming, automotive HMI, and medical HMI industries. One example is Apple's Vision Pro, where users navigate and operate apps with their eyes, hands, and voice. Users can even arrange apps anywhere in their space and scale them to the ideal size. The Vision Pro is a prime example of the latest advances in gesture-based UI, pushing the boundaries of innovation.

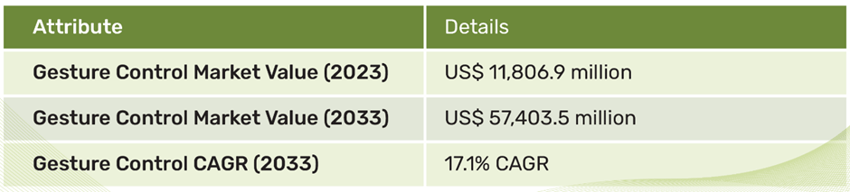

According to a report from Future Market Insights, global demand for gesture-based UI is projected to grow at a CAGR of 17.1% over the ten years from 2023 to 2033, reaching US$57,403.5 million in 2033.
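The headline figures can be sanity-checked with the standard compound-growth relation, end = start × (1 + CAGR)^years. The minimal sketch below assumes annual compounding over ten periods and backs out the 2023 market size implied by the two quoted numbers. The result (roughly US$11.8 billion) is derived arithmetic, not a figure taken from the report, and may differ from the report's own base-year value because of rounding or a different base-year convention.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert end = start * (1 + cagr) ** years to recover the starting value."""
    return end_value / (1.0 + cagr) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


END_2033 = 57_403.5  # US$ million, as quoted from Future Market Insights
RATE = 0.171         # 17.1% CAGR, as quoted
YEARS = 10           # 2023 -> 2033, assuming ten annual compounding periods

# Derived, not reported: the 2023 value consistent with the two quoted figures.
start_2023 = implied_start_value(END_2033, RATE, YEARS)
print(f"Implied 2023 value: US${start_2023:,.0f} million")

# Round trip: feeding the derived value back in should reproduce the quoted rate.
print(f"Round-trip CAGR: {cagr(start_2023, END_2033, YEARS):.3%}")
```

The round trip is a cheap consistency check: if the quoted end value and growth rate did not belong together, the recovered rate would drift away from 17.1%.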

The Application of Gesture Control in the IoT Segment

Gestures have found a compelling application in IoT scenarios, redefining the way we interact with smart devices. With just a wave, you can set the lights to your preferred brightness, adjust the thermostat to the right temperature, or play music. Integrating gesture recognition technology into IoT devices makes this seamless interaction possible, and it holds considerable potential for the industry's future.

One example is the Google Nest Hub, which uses gesture control to let users play or pause media, skip tracks, and even dismiss alarms with just a wave. Such applications enhance user convenience and can contribute to energy efficiency, since devices respond directly to user presence and preferences. They illustrate the potential of gestures in shaping the future of IoT.
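To make the smart-display example concrete, here is a minimal Python sketch of context-dependent gesture handling on a hypothetical device. It assumes a recognizer already emits labels such as "wave" and "swipe"; the state fields, gesture names, and the priority rule (a ringing alarm takes precedence over media) are illustrative assumptions, not Google's implementation.

```python
from dataclasses import dataclass


@dataclass
class HubState:
    """Hypothetical state of a smart display."""
    alarm_ringing: bool = False
    media_playing: bool = False
    track_index: int = 0


def handle_gesture(state: HubState, gesture: str) -> str:
    """Map a recognized gesture to an action based on what the device is doing now."""
    if gesture == "wave":
        # Assumed priority: a ringing alarm is dismissed before media is touched.
        if state.alarm_ringing:
            state.alarm_ringing = False
            return "alarm_dismissed"
        # Otherwise the same wave toggles play/pause.
        state.media_playing = not state.media_playing
        return "media_playing" if state.media_playing else "media_paused"
    if gesture == "swipe" and state.media_playing:
        # Skipping only makes sense while something is playing.
        state.track_index += 1
        return f"skipped_to_track_{state.track_index}"
    # Unknown gestures, or gestures that do not fit the current context, are ignored.
    return "ignored"


hub = HubState(alarm_ringing=True, media_playing=True)
for g in ["wave", "wave", "swipe", "wave", "swipe"]:
    print(g, "->", handle_gesture(hub, g))
```

Making the mapping depend on device state lets one simple gesture do several jobs without conflict, which matters when the gesture vocabulary is deliberately small.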

How Does Gesture-Based UI Work?

Gesture-based UI, or gestural user interface, captures and interprets the user's physical movements to interact with electronic devices. These systems rely on sensors and cameras to detect movement, then apply recognition algorithms to identify the gesture. Once a gesture is identified, it is mapped to a specific command or action.

For instance, a swipe to the right might open the next screen, and a pinch gesture might zoom in on an image. The recognized motion triggers the associated action, allowing users to control devices naturally and intuitively. In touchless and immersive environments, this technology gives users an engaging, interactive experience. However, it also presents challenges, such as ensuring precise recognition and addressing the learning curve for new users. Despite these challenges, gesture-based UI continues to evolve and find applications in devices ranging from smartphones to gaming consoles, enriching how users interact with technology.
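The sense, recognize, and map steps described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the sensor layer already delivers each finger or hand as a list of (x, y) samples. The thresholds, gesture labels, and command names are hypothetical, and production recognizers (especially camera-based ones) typically use trained models rather than fixed thresholds.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # one (x, y) sample from a touch panel or hand tracker
Track = Sequence[Point]      # successive samples for one finger or hand

# Hypothetical tuning values; real products calibrate these per sensor.
SWIPE_MIN_DISTANCE = 80.0    # minimum horizontal travel, in sensor units
PINCH_MIN_RATIO = 1.25       # minimum change in finger spacing to count as a pinch

# Mapping step: recognized gesture -> command (names are hypothetical).
COMMANDS: Dict[str, str] = {
    "swipe_right": "next_screen",
    "swipe_left": "previous_screen",
    "pinch_open": "zoom_in",
    "pinch_close": "zoom_out",
}


def classify_swipe(track: Track) -> Optional[str]:
    """Label one track as a horizontal swipe, or None if too short or too vertical."""
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    if abs(dx) >= SWIPE_MIN_DISTANCE and abs(dx) > abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return None


def classify_pinch(a: Track, b: Track) -> Optional[str]:
    """Compare the spacing of two tracks at the start and end of the gesture."""
    if len(a) < 2 or len(b) < 2:
        return None
    start = math.dist(a[0], b[0])
    end = math.dist(a[-1], b[-1])
    if start == 0:
        return None
    ratio = end / start
    if ratio >= PINCH_MIN_RATIO:
        return "pinch_open"
    if ratio <= 1.0 / PINCH_MIN_RATIO:
        return "pinch_close"
    return None


def recognize(tracks: List[Track]) -> Optional[str]:
    """Recognition step (classify the tracks), then mapping step (look up the command)."""
    if len(tracks) == 1:
        gesture = classify_swipe(tracks[0])
    elif len(tracks) == 2:
        gesture = classify_pinch(tracks[0], tracks[1])
    else:
        gesture = None
    return COMMANDS.get(gesture) if gesture else None


print(recognize([[(10, 200), (60, 205), (140, 210)]]))
print(recognize([[(100, 100), (80, 100)], [(120, 100), (160, 100)]]))
```

Keeping recognition and the command table separate means a product team can remap gestures to actions without touching the recognizer, and movements that fall below the thresholds simply produce no command rather than a wrong one.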


The Significance of Voice and Gesture Control

Enhanced User Experience

Touch, voice, and gesture control each create a direct link between users and devices. As an alternative to traditional input methods like buttons, remote controls, and touchscreens, responsive voice and gesture recognition can deliver a seamless and delightful user experience, with actions carried out in real time when recognition is fast enough. Voice and gestures simplify interactions such as scrolling through a web page, playing a game, zooming in on a map, or turning the pages of an e-book.

Aesthetic Benefits

Embedded products with voice and gesture interfaces often have sleek, minimalist designs. Removing physical buttons and knobs saves space and allows for more streamlined, aesthetically pleasing products. This is particularly relevant for car dashboards and IoT devices, where touchscreens already create a modern, uncluttered appearance.

Accessibility and Inclusivity

Individuals with disabilities can benefit from voice and gesture interfaces. Features such as voice commands, touch magnification, and gesture shortcuts can empower users with various physical and cognitive impairments to interact with embedded products independently.

What Lies Ahead?

The future of embedded GUIs is an exciting frontier shaped by the transformative potential of gesture-based interfaces. Gesture recognition technology is evolving rapidly, and experts believe we have only scratched the surface of its capabilities. Over time, they anticipate a leap in responsiveness, eventually reaching a point where systems can predict user actions before they occur. Different industries will harness gesture-based UI for specific applications. In the automotive HMI sector, gesture recognition can enhance driver safety by enabling hands-free control of infotainment systems. Demand for touchless interaction, driven by health and hygiene concerns, will also fuel adoption of gesture-based UI in public kiosks, medical devices, and more, reducing the need for physical contact.

Read how Aptera Motors built a gesture-based automotive GUI in less than a year.

Industries across the spectrum are harnessing this innovation. In gaming, software with enhanced GUIs is pushing boundaries by incorporating gaze tracking and even telepathy-like interactions, resulting in more immersive virtual reality experiences. Meanwhile, the healthcare sector is adopting advanced GUI devices designed for high-risk environments. These devices streamline processes and reduce the risk of germ transmission, a crucial concern in healthcare settings.

For embedded GUI teams, now is the opportune moment to make touchless gestures a pivotal element of their development toolkit. A company that takes this leap gains a competitive edge by offering an intuitive and engaging user experience.

Never tried Storyboard before?

Grab the complimentary 30-day trial and experience it firsthand!
